Commons:Valued image candidates/Perceptron-unit.svg

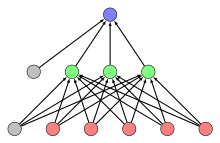

Perceptron-unit.svg

| Image |  |
|---|---|
| Nominated by | MartinThoma (talk) on 2016-05-30 11:35 (UTC) |
| Scope | Nominated as the most valued image on Commons within the scope: Single Perceptron unit |
| Used in | Global usage |
| Review (criteria) |  |